DCP

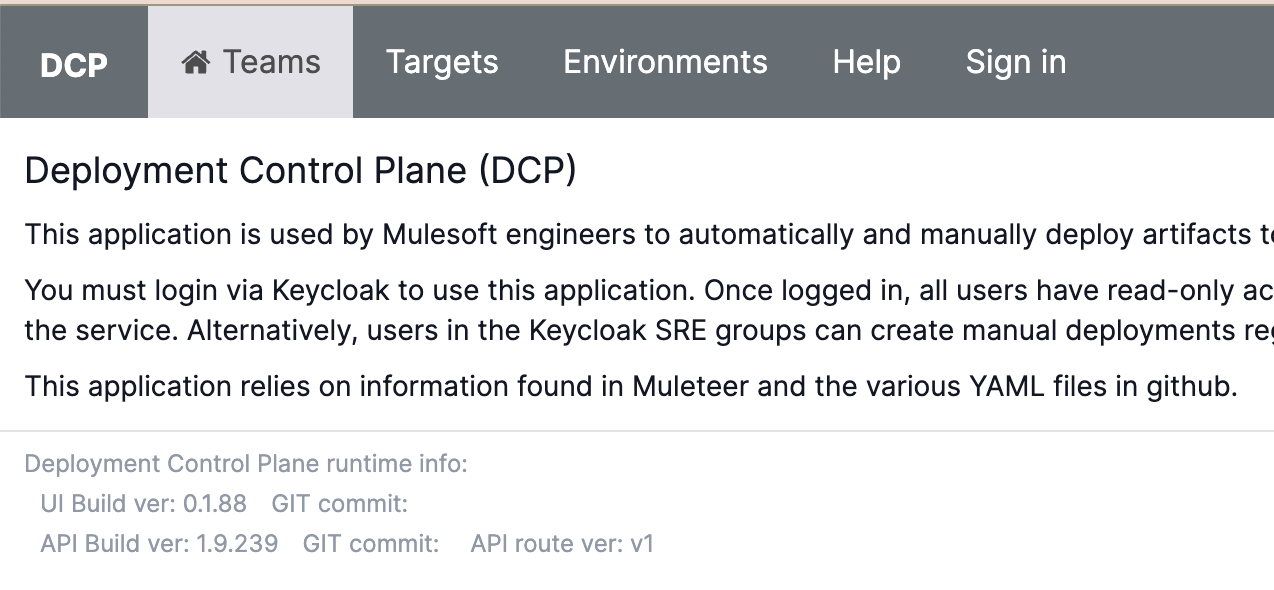

Deploy Systems is a team dedicated to building and operating services related to the deploy pipeline in the CI/CD for MuleSoft.

The primary service we work on is Deploy Control Plane (DCP). If you are interested in onboarding to the service, see the onboarding guide.

Connect

We would love to hear from you!

Message us 💬

Go to the #ms-prodeng-asks channel and start a conversation with the Prod Eng Bot using the topic "cpc" or "Jenkins: Deploy Job Failure".

Submit work requests 📤

Create a work item in GUS

Escalate to us 🚨

Create a new incident in PagerDuty

Last Updated: 2024-07-01T19:32:00+0000

Making Infrastructure changes in DCP

The tf-deploy-systems-infra repo manages:

- Administrative role to be used by Jenkins when applying changes to infrastructure.

- DNS Zones for deploy.msap.io and subdomains

- IAM resources copied from tf-k8s-core

- How To

EKS Cluster provisioning via Janus

- The linked documentation for "EKS Cluster provisioning via Janus" contains references and instructions for the prerequisite infrastructure configurations


DCP Infrastructure Automation

Tier 0

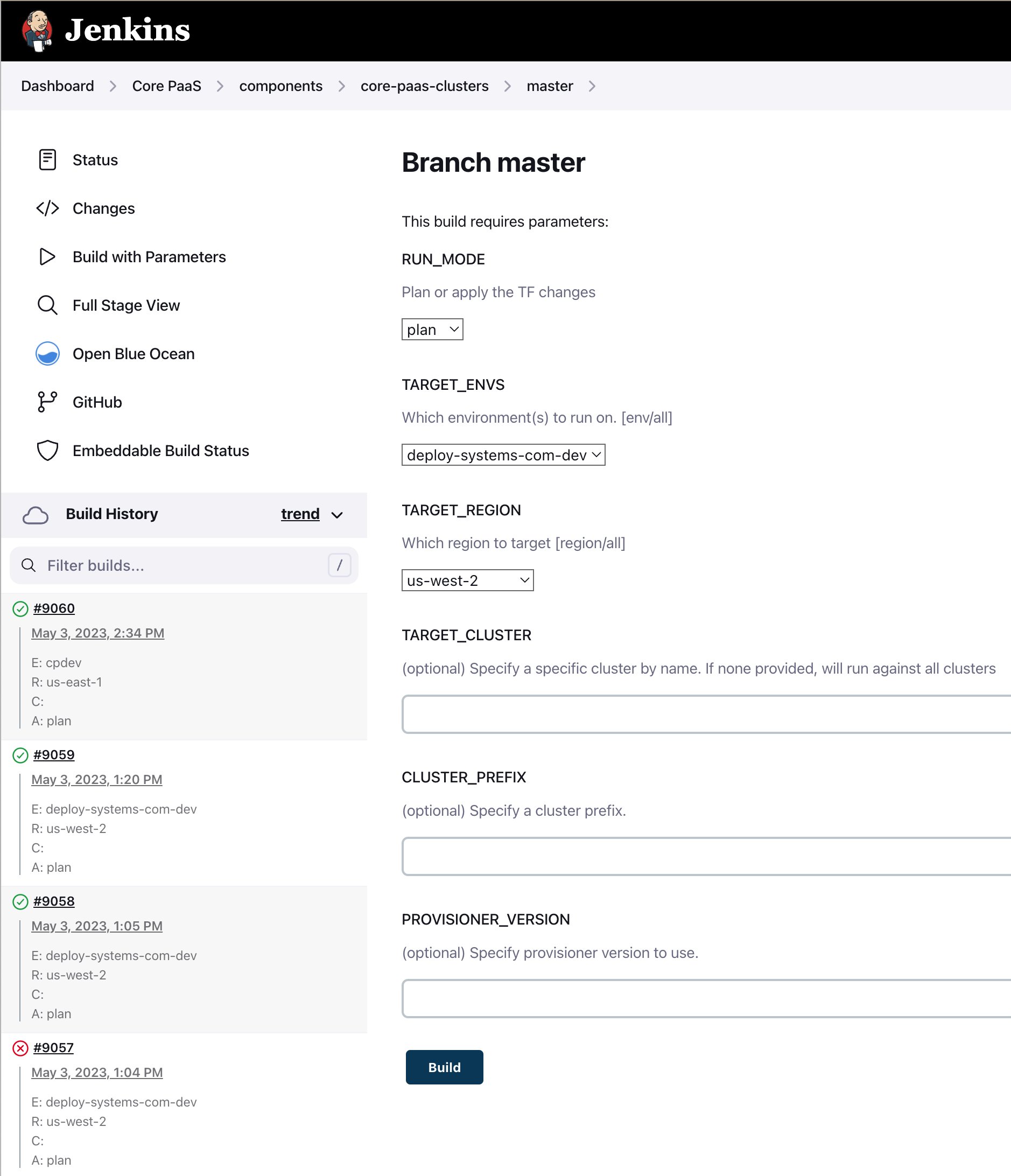

The CorePaaS Clusters job runs the base automation for our EKS clusters.

- Navigate to the `core-paas-clusters` job in Jenkins

- Click on the `master` branch

- Click `Build With Parameters`

- If this is the first run, set the `RUN_MODE` to `plan`

- Choose the proper `TARGET_ENV` and `TARGET_REGION`

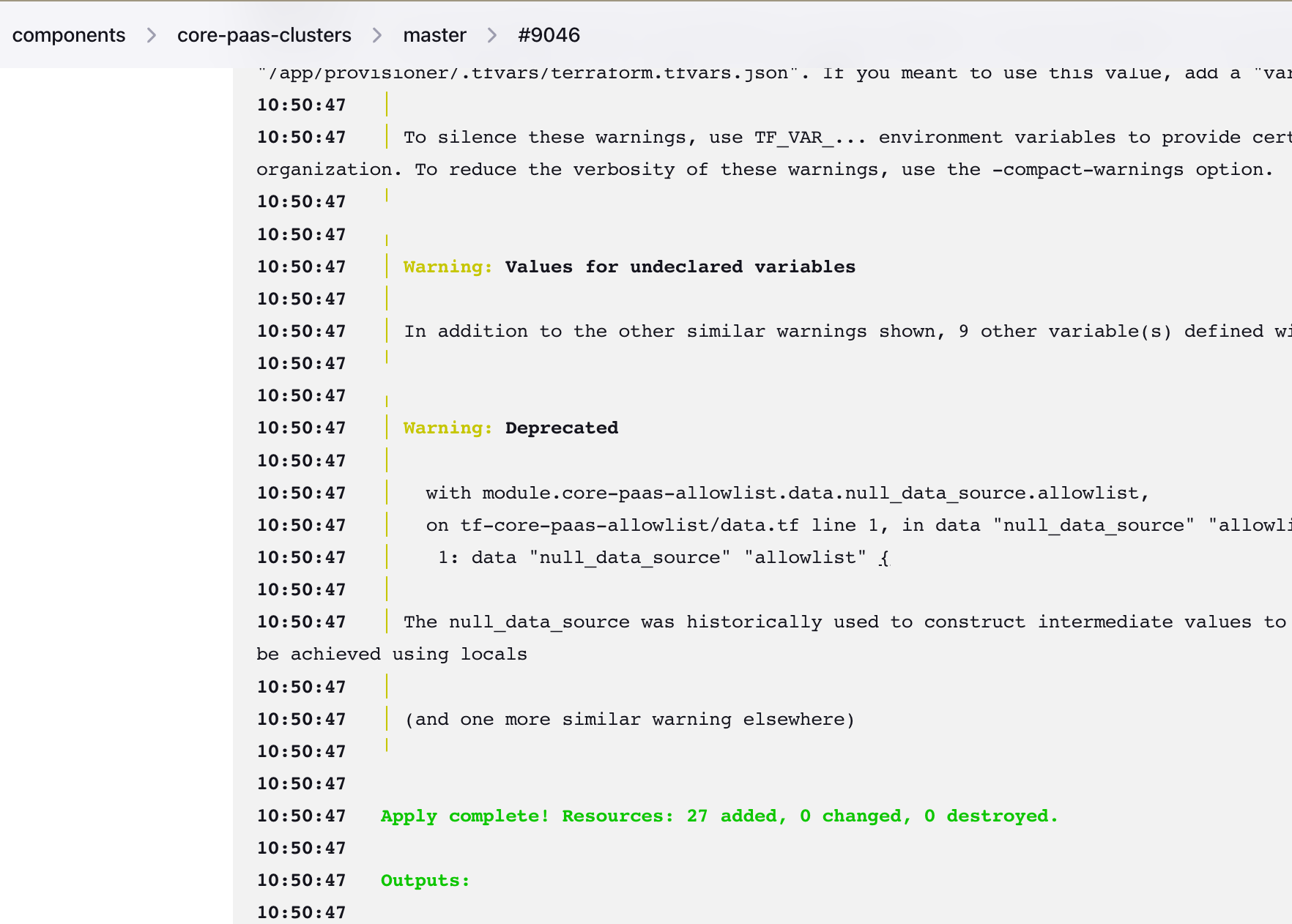

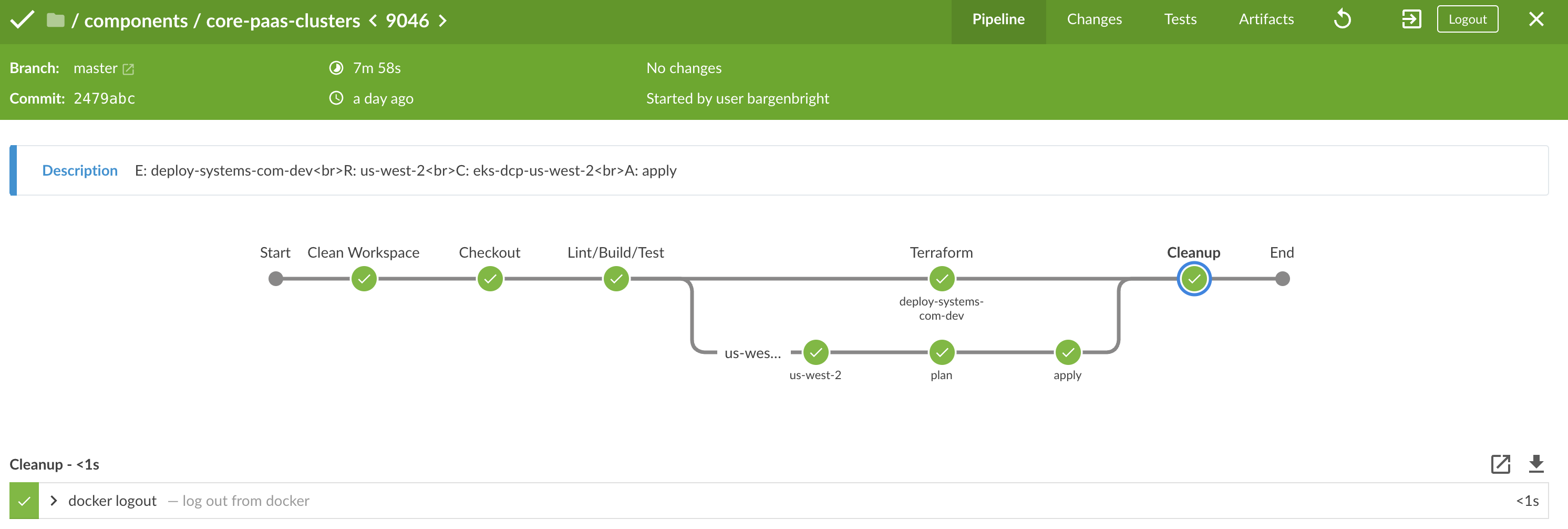

- Confirm the deploy was successful via the console logs or the BlueOcean UI

Console Logs

BlueOcean UI

Follow up tasks

Allowlist for core paas

The allowlist will need to be updated to add all of the Elastic IPs from the new environment.

Allowlist for GitHub Enterprise

The allowlist for GitHub Enterprise will need to be updated so that the new environment can perform git operations.

See #mulesoft-github-allow-list for more info.

Tier 1

CorePaaS Janus job runs the automation for our service dependencies (ArgoCD, Argo Workflow)


DCP Technical Designs

This is an index of all technical designs and other solution-oriented documents, most of which live in Quip.

- Deploy Worker: https://salesforce.quip.com/rsXNAEX3ej9j

- Deploy API: https://salesforce.quip.com/UC22AE1WXy6N

- Deploy Future State Technical Design: https://salesforce.quip.com/bqSSAdlsnIJ8

- Core PaaS Deployer: https://salesforce.quip.com/iAgiAKqxQOid

Other

- Demo: Core Paas Deploy Workflow: https://salesforce.quip.com/EZncA2kSIj7g


Developing on DCP

Prerequisites

- awscli: `brew install awscli`

- kubectl: `brew install kubectl`

Connect to the Dev cluster

Once you've installed the prerequisites, navigate to PCSK and export credentials:

- https://dashboard.prod.aws.jit.sfdc.sh/

- Request access for your specific environment, e.g. `deploy-systems-com-dev`

- Get access approved by the account owners. Navigate to the account owner section.

- Export credentials and paste them into your shell.

- Update your kubeconfig: `aws eks update-kubeconfig --name eks-us-west-2 --region us-west-2`

- Test and Profit: `kubectl get all`

Note: if you're not sure of the EKS cluster name, log into the AWS console from PCSK, navigate to EKS, and look at the list of clusters.


Credentials

The following credentials are used by DCP and stored in AWS Secrets Manager in the deploy-systems-com-dev account (287796791794):

| Credential | Description | Type | Expires | ARN |
|---|---|---|---|---|
| harbor | Robot user for prodeng-deploy | API Key | N/A | arn:aws:secretsmanager:us-east-1:287796791794:secret:harbor-Z8iL8m |
| prodeng-deploy-mulesoft | github | API Key | N/A | arn:aws:secretsmanager:us-east-1:287796791794:secret:prodeng-deploy-mulesoft-zRjpz2 |
| argo-workflow-db-password | ArgoWorkflow DB | Secret | N/A | arn:aws:secretsmanager:us-west-2:287796791794:secret:argo-workflow-db-password-AUIxbp |
| dcp-db-password | RDS Passwd | Secret | N/A | arn:aws:secretsmanager:us-west-2:287796791794:secret:dcp-db-password-QWYC1r |
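The ARNs in the table span two regions (us-east-1 and us-west-2), which matters when pointing a CLI or SDK call at a secret. As a minimal sketch, assuming only the standard Secrets Manager ARN layout, the region and account can be pulled out of an ARN like this. The `SecretArn` type and `parse_secret_arn` helper are hypothetical names used for illustration, not part of any DCP tooling.

```python
from typing import NamedTuple


class SecretArn(NamedTuple):
    region: str
    account_id: str
    secret_id: str  # secret name plus the random suffix AWS appends


def parse_secret_arn(arn: str) -> SecretArn:
    """Split a Secrets Manager ARN into region, account, and secret id."""
    # Layout: arn:<partition>:secretsmanager:<region>:<account>:secret:<id>
    parts = arn.split(":", 6)
    if len(parts) != 7 or parts[2] != "secretsmanager" or parts[5] != "secret":
        raise ValueError(f"not a Secrets Manager secret ARN: {arn}")
    return SecretArn(region=parts[3], account_id=parts[4], secret_id=parts[6])


harbor = parse_secret_arn(
    "arn:aws:secretsmanager:us-east-1:287796791794:secret:harbor-Z8iL8m"
)
dcp_db = parse_secret_arn(
    "arn:aws:secretsmanager:us-west-2:287796791794:secret:dcp-db-password-QWYC1r"
)
print(harbor.region)  # us-east-1
print(dcp_db.region)  # us-west-2
```

The parsed region is the value you would pass as `--region` when reading the secret with the AWS CLI.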


Configuring Prod access via yubikey

DCP Secrets Management

This guide serves as a resource for secrets management in DCP.

At the time of this writing our secrets are managed in two different ways, Kilonova for bootstrapping and aws-secrets-manager for everything else. This guide will focus on aws-secrets-manager.

Kilonova

Secrets pertaining to bootstrapping the cluster in Janus are managed via kilonova-envs-config.

Documentation for managing SOPS secrets can be found here: getting started with secrets

AWS Secrets Manager

We've chosen to use AWS Secrets Manager with Kubernetes to manage our secrets. This lets us manage resources declaratively, using IAM Roles and Policies to limit access to specific pods within the EKS cluster.

Using the Kubernetes Secrets Store CSI Driver lets us mount secrets, keys, and certs stored in AWS as volumes attached to the container's filesystem. It can also sync them from AWS as Kubernetes secrets, which lets us set environment variables natively within deployments.

Install the ASCP and Provider

The ASCP driver and Provider are installed as ArgoCD application resources. See deploy-systems-argo-cd as a reference.

Supporting IAM Roles and Policies

In order to give proper access to EKS pods we'll need to define a set of IAM Roles and Policies. The upstream documentation is helpful for different methods of configuration.

The best example of how this configuration works for DCP can be found in this PR: Allow data-access-service to fetch RDS password from secrets manager.

Kubernetes Resources

For EKS pods to be able to authenticate with Secrets Manager and assume the role, we need:

- A Kubernetes Service Account.

- A secret store resource implementing `SecretProviderClass`.

- A deployment that mounts the secret store and provides secrets to the pod.

An example PR can be found here: Adding secrets to data-access-service.

Kubernetes Service Account

We need a service account in the same namespace as the pods, annotated with the IAM Role ARN.

There are three key pieces of information here:

- The namespace of the service account must match the namespace defined within the IAM aws_iam_policy_document.

- The `ServiceAccount` name must also match what is defined within the aws_iam_policy_document.

- Lastly, the annotation is the key that ties everything together. If the annotation is incorrect the pod will fail to mount the secret. The annotation is a direct link to the role defined in Terraform.

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: data-access-service-secrets
      namespace: deploy
      annotations:
        eks.amazonaws.com/role-arn: "arn:aws:iam::287796791794:role/data-access-service-secrets"

Secret Store Resource

A `SecretProviderClass` is required in the same namespace as the EKS pod, with an AWS Secrets Manager object referenced directly by its ARN.

Test fetching the mirrored secret with the following:

kubectl get secret dcp-db-password -n deploy --template='{{.data.rds_password | base64decode}}'

In the example below we're also instructing the driver to mirror the secret locally as a Kubernetes secret with the secretObjects stanza.

    apiVersion: secrets-store.csi.x-k8s.io/v1
    kind: SecretProviderClass
    metadata:
      namespace: deploy
      name: data-access-service-secrets
    spec:
      provider: aws
      parameters:
        objects: |
          # This is the ARN to the specific secret you wish to load
          - objectName: "arn:aws:secretsmanager:us-west-2:287796791794:secret:dcp-db-password-QWYC1r"
            objectType: secretsmanager
            objectAlias: dcp-db-password
      # This section provides support for mirroring AWS Secrets Manager as a local Kubernetes secret
      # https://secrets-store-csi-driver.sigs.k8s.io/topics/sync-as-kubernetes-secret.html
      secretObjects:
        - secretName: dcp-db-password
          type: Opaque
          data:
            - objectName: dcp-db-password
              key: rds_password
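The `kubectl get secret ... --template` test command decodes the mirrored secret because the Kubernetes API always returns the values under a Secret's `data` field base64-encoded. The sketch below shows the same decoding step in Python against a Secret manifest. It assumes JSON shaped like the output of `kubectl get secret dcp-db-password -o json`; the `decode_secret_key` helper and the sample password are hypothetical placeholders.

```python
import base64
import json


def decode_secret_key(secret_json: str, key: str) -> str:
    """Return the decoded value of one key from a Secret's JSON manifest."""
    secret = json.loads(secret_json)
    # Values under .data are base64-encoded by the Kubernetes API.
    return base64.b64decode(secret["data"][key]).decode("utf-8")


# Hypothetical manifest, trimmed to the fields used here.
# "example-password" is a placeholder, not a real credential.
sample = json.dumps({
    "kind": "Secret",
    "metadata": {"name": "dcp-db-password", "namespace": "deploy"},
    "data": {
        "rds_password": base64.b64encode(b"example-password").decode("ascii"),
    },
})

print(decode_secret_key(sample, "rds_password"))  # example-password
```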

Deployment

The pod spec must provide a `serviceAccountName`, a volume that maps back to the secret store, and a matching `volumeMounts` entry on the container.

    spec:
      serviceAccountName: data-access-service-secrets # This must match the kubernetes `ServiceAccount` name.
      volumes:
        - name: secrets-store-inline
          csi:
            driver: secrets-store.csi.k8s.io
            readOnly: true
            volumeAttributes:
              secretProviderClass: "data-access-service-secrets" # This must match the `SecretProviderClass` name.
      ...
      containers:
        - env:
            - name: "DB_PASSWORD"
              valueFrom:
                secretKeyRef: # Fetch the password from the local kubernetes secret, mirrored from aws-secrets-manager
                  name: "dcp-db-password" # Matches the secretName under secretObjects in the `SecretProviderClass`
                  key: "rds_password"
          ...
          volumeMounts:
            - name: secrets-store-inline
              mountPath: "/mnt/secrets-store"
              readOnly: true

Validation

IAM Role

We can validate our AWS Roles and policies to ensure we have the proper resources. See below:

$ aws iam get-role --role-name data-access-service-secrets --query Role.AssumeRolePolicyDocument

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"Federated": "arn:aws:iam::287796791794:oidc-provider/oidc.eks.us-west-2.amazonaws.com/id/C0EB4AEEDFD18CBBCBD0686222057E0A"

},

"Action": "sts:AssumeRoleWithWebIdentity",

"Condition": {

"StringEquals": {

"oidc.eks.us-west-2.amazonaws.com/id/C0EB4AEEDFD18CBBCBD0686222057E0A:aud": "sts.amazonaws.com",

"oidc.eks.us-west-2.amazonaws.com/id/C0EB4AEEDFD18CBBCBD0686222057E0A:sub": "system:serviceaccount:deploy:data-access-service-secrets"

}

}

}

]

}
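The part of this output worth checking by eye is the `:sub` condition: it must name the same namespace and service account as the Kubernetes `ServiceAccount`, in the form `system:serviceaccount:<namespace>:<name>`. The following is a minimal sketch of that check, assuming the trust policy has been loaded as a Python dict. The helper names are hypothetical and the policy is trimmed from the output above.

```python
def expected_subject(namespace: str, service_account: str) -> str:
    """Build the OIDC `sub` claim EKS issues for a service account."""
    return f"system:serviceaccount:{namespace}:{service_account}"


def _as_list(value):
    """IAM allows a single string or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def trust_policy_allows(policy: dict, namespace: str, service_account: str) -> bool:
    """True if an Allow statement pins the expected `sub` via StringEquals."""
    wanted = expected_subject(namespace, service_account)
    for statement in _as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        if "sts:AssumeRoleWithWebIdentity" not in _as_list(statement.get("Action", [])):
            continue
        conditions = statement.get("Condition", {}).get("StringEquals", {})
        for key, value in conditions.items():
            # Condition keys look like "<oidc-provider>:sub".
            if key.endswith(":sub") and wanted in _as_list(value):
                return True
    return False


OIDC = "oidc.eks.us-west-2.amazonaws.com/id/C0EB4AEEDFD18CBBCBD0686222057E0A"
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Federated": f"arn:aws:iam::287796791794:oidc-provider/{OIDC}"},
        "Action": "sts:AssumeRoleWithWebIdentity",
        "Condition": {"StringEquals": {
            f"{OIDC}:aud": "sts.amazonaws.com",
            f"{OIDC}:sub": "system:serviceaccount:deploy:data-access-service-secrets",
        }},
    }],
}

print(trust_policy_allows(TRUST_POLICY, "deploy", "data-access-service-secrets"))  # True
print(trust_policy_allows(TRUST_POLICY, "default", "data-access-service-secrets"))  # False
```

This sketch only handles `StringEquals`; a policy that uses `StringLike` or wildcards would need a looser comparison.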

IAM Policies

$ aws iam list-attached-role-policies --role-name data-access-service-secrets --query 'AttachedPolicies[].PolicyArn' --output text

...

arn:aws:iam::287796791794:policy/data-access-service-secrets-deploy-systems-com-dev

IAM Policies Version

$ aws iam get-policy-version --policy-arn arn:aws:iam::287796791794:policy/data-access-service-secrets-deploy-systems-com-dev --version-id v1

...

{

"PolicyVersion": {

"Document": {

"Statement": [

{

"Action": "secretsmanager:GetSecretValue",

"Effect": "Allow",

"Resource": "arn:aws:secretsmanager:us-west-2:287796791794:secret:dcp-db-password-QWYC1r",

"Sid": "Secrets"

}

],

"Version": "2012-10-17"

},

"VersionId": "v1",

"IsDefaultVersion": true,

"CreateDate": "2023-06-06T16:59:36+00:00"

}

}
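When reading the policy version output, the thing to confirm is that an `Allow` statement grants `secretsmanager:GetSecretValue` on the exact ARN used in the `SecretProviderClass`. A minimal sketch of that check follows, assuming the `Document` portion of the output has been loaded as a dict. The `policy_allows_secret` helper is a hypothetical name, and it compares ARNs literally rather than evaluating IAM wildcards.

```python
def _to_list(value):
    """IAM allows a single string or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def policy_allows_secret(document: dict, secret_arn: str) -> bool:
    """True if an Allow statement grants GetSecretValue on this exact ARN."""
    for statement in _to_list(document.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        actions = _to_list(statement.get("Action", []))
        resources = _to_list(statement.get("Resource", []))
        # Literal comparison only: wildcard actions or resources are not expanded.
        if "secretsmanager:GetSecretValue" in actions and secret_arn in resources:
            return True
    return False


SECRET_ARN = "arn:aws:secretsmanager:us-west-2:287796791794:secret:dcp-db-password-QWYC1r"
POLICY_DOCUMENT = {
    "Statement": [{
        "Action": "secretsmanager:GetSecretValue",
        "Effect": "Allow",
        "Resource": SECRET_ARN,
        "Sid": "Secrets",
    }],
    "Version": "2012-10-17",
}

print(policy_allows_secret(POLICY_DOCUMENT, SECRET_ARN))  # True
```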

Resources:

- Integrating Secrets Manager

- Secrets Provider Class Resource

- Associating IAM Roles with K8s Service Account


Deploy new EKS cluster via Janus

This document details the steps to deploy a new EKS cluster via Janus - along with all necessary prerequisites.

Table of contents

- Prerequisites

- tf-k8s-core (manages core resources for a given environment - ie: kdeploy-dev, kdev, etc).

- tf-mulesoft-records (manages top level/root mulesoft DNS records)

- core-paas-provisioner (provisioner environment settings - used by subsequent steps)

- core-paas-clusters (kubernetes cluster settings)

- core-paas-teleport-cli (command line tool used by core-paas-janus to login to the EKS cluster post-creation)

- core-paas-base-image-infra-deploy (used by core-paas deploy jobs)

- kilonova-envs-config (used by core-paas deploy jobs to pick up environment specific settings (secrets, etc) when deploying to your EKS cluster)

- core-paas-janus (EKS cluster creation and deployment)

Prerequisites

tf-k8s-core (manages core resources for a given environment - ie: kdeploy-dev, kdev, etc).

- New Environment: If provisioning the EKS cluster in a new environment, tf-k8s-core must be updated to include the environment. Vault secrets must also exist in path `secret/keys/<env>`. The Jenkins job must then be run post-merge in order to provision the resources via Terraform.

  - examples:

    - PR to add deployer arn configs for kdeploy-dev: https://github.com/mulesoft-ops/tf-k8s-core/pull/289

      - 🚨 important note: A corresponding freeipa group must also exist for your environment. In this case we were renaming our environment from deploy-systems-com-dev to kdeploy-dev. While "deploy-systems-com-dev" existed as a freeipa group, "kdeploy-dev" did not. This required reaching out to members of the mulesoft security team for group creation.

    - PR to add kdeploy-dev environment: https://github.com/mulesoft-ops/tf-k8s-core/pull/288

    - The vault secrets in path `secret/keys/kdeploy-dev` were populated by copying over the existing `secret/keys/kdev` values via the #ms-prodeng-asks thread here: https://salesforce-internal.slack.com/archives/C03UAEK0TNY/p1684435751354739

    - Jenkins job that should be run against the corresponding env/region: https://jenkins.build.msap.io/job/DevOps/job/terraform-new/job/tf-k8s-core/job/master/build?delay=0sec

- New Region (existing environment): If provisioning the EKS cluster into a new region in an existing environment, update the existing .tfvars file in the profiles directory with any region-specific updates, if applicable.

  - examples:

    - To add us-east-1 subnet data for the kdeploy-dev environment, you would do so here: https://github.com/mulesoft-ops/tf-k8s-core/blob/master/profiles/kdeploy-dev.tfvars#L13. Make sure the "allowedRegions" variable in the Jenkinsfile includes the corresponding region for your environment here: https://github.com/mulesoft-ops/tf-k8s-core/blob/master/Jenkinsfile#L38

    - The Jenkins job will need to be run against the new env/region combo here: https://jenkins.build.msap.io/job/DevOps/job/terraform-new/job/tf-k8s-core/job/master/build?delay=0sec

tf-mulesoft-records (manages top level/root mulesoft DNS records)

- When adding an entry for a new domain in the tf-k8s-core step above, tf-k8s-core creates the route53 DNS records for your domain in your account. However, the root domain (in this case msap.io) will need to be updated to correctly direct queries for your subdomain:

  - example:

    - A new domain "kdeploy-dev.msap.io" was added to tf-k8s-core here: https://github.com/mulesoft-ops/tf-k8s-core/blob/master/profiles/kdeploy-dev.tfvars#L1. This created some route53 records in our AWS account, however the upstream SOA/NS records still pointed to the root domain msap.io.

    - To fix this, we added a new entry to tf-mulesoft-records here: https://github.com/mulesoft-ops/tf-mulesoft-records/pull/116 and ran the corresponding Jenkins job here: https://jenkins.build.msap.io/job/DevOps/job/terraform-new/job/tf-mulesoft-records/job/master/build?delay=0sec

core-paas-provisioner (provisioner environment settings - used by subsequent steps)

- Required configuration: new environments should be added to the terraform/eks config here: https://github.com/mulesoft/core-paas-provisioner/blob/master/terraform/eks.tf

  - example: PR to add kdeploy-dev environment: https://github.com/mulesoft/core-paas-provisioner/pull/686

  - 🚨 important note: once the PR has been merged, find the corresponding master build in Jenkins and note the artifact version tag for the newly built provisioner (example: `v5.3.125`). This will be used in the `https://github.com/mulesoft/core-paas-clusters/blob/master/deployments/<environment>.json` file in the following core-paas-clusters config.

core-paas-clusters (kubernetes cluster settings)

- Required configuration: While the core-paas-janus repo/Jenkins job will create PRs against core-paas-clusters, the following settings must be configured for a new environment:

  - Vault secrets in path `secret/aws/<env>`. The key/value pairs contained in this vault path will need to be an AWS access key and corresponding secret key with admin access.

    - example: The vault secrets in `secret/aws/kdeploy-dev` were populated by having the AWS Access/Secret Key values for our core-paas-clusters IAM user entered into vault via an #ms-prodeng-asks thread. The following key/value pairs were added: `access_key = <AWS access key value>` and `secret_key = <AWS secret key value>`

  - Add a deployments file for the new environment

    - example: https://github.com/mulesoft/core-paas-clusters/blob/master/deployments/kdeploy-dev.json

      - 🚨 important note: be sure the `provisioner_image_tag` value is set to a value equal to (or higher than) the version you created in the previous core-paas-provisioner step (example: https://github.com/mulesoft/core-paas-clusters/blob/master/deployments/kdeploy-dev.json#L6)

  - Add the new environment to the repo's Jenkinsfile

core-paas-teleport-cli (command line tool used by core-paas-janus to login to the EKS cluster post-creation)

- Required configuration: Add your new environment to the cmd/accounts.go file

- example PR adding kdeploy-dev: https://github.com/mulesoft/core-paas-teleport-cli/pull/95

core-paas-base-image-infra-deploy (used by core-paas deploy jobs)

- Required configuration: Based on the new core-paas-teleport-cli version built in the previous step, update the Dockerfile in this repo to pull your new image/artifact

- example PR updating the tele cli version: https://github.com/mulesoft/core-paas-base-image-infra-deploy/pull/292/files

kilonova-envs-config (used by core-paas deploy jobs to pick up environment specific settings (secrets, etc) when deploying to your EKS cluster)

Note: this is optional for the EKS cluster creation itself, as "deploy" can be unchecked in the core-paas-janus Jenkins job, which will leave out any deploy steps (more notes on core-paas deploys are included in the core-paas-janus step below).

- Required configuration for secrets: As noted in the README, each environment needs a `.sops.yaml` file which contains the ARN for a KMS key which will encrypt/decrypt your secret values. The KMS key for your env/region will be named "core-paas" and is created via the first step in this guide (tf-k8s-core).

  - example PR adding the required `.sops.yaml` config along with several other config/secrets files for the kdeploy-dev environment: https://github.com/mulesoft/kilonova-envs-config/pull/24858

core-paas-janus (EKS cluster creation and deployment)

⚠️ 🚧 ⚠️ Note: Known workaround currently required for “deploy” into new environments. Details provided below ⚠️ 🚧 ⚠️

- New Environment: The Jenkinsfile in the core-paas-janus repo must be updated to include the environment. A Jenkins credential must be created here https://jenkins.build.msap.io/job/core-paas/job/components/job/core-paas-janus/credentials/ named TELE_KEY_JENKINS_ (example: TELE_KEY_JENKINS_KDEPLOY-DEV). The value for this credential can be set to an empty string. The Jenkins job must then be run with deploy unchecked (see note in the examples section below) post-merge in order to provision your EKS cluster.

  - examples:

    - PR to add kdeploy-dev environment: https://github.com/mulesoft/core-paas-janus/pull/475/files

    - The Jenkins credential TELE_KEY_JENKINS_KDEPLOY-DEV (with value set to an empty string) was created via the #ms-prodeng-asks thread here: https://salesforce-internal.slack.com/archives/C03UAEK0TNY/p1684254109468809

    - Jenkins job that should be run against the corresponding env/region: https://jenkins.build.msap.io/job/core-paas/job/components/job/core-paas-janus/job/master/build?delay=0sec

    - parameter settings for the Jenkins job

      - TARGET_ENV: your environment (e.g. kdeploy-dev)

      - TARGET_REGION: AWS region (e.g. us-west-2)

      - ACTION: (default set to "apply", to create the cluster)

      - CLUSTER_NAME: (default set to "eks", the region will be auto-appended during the Janus job run)

      - DEPLOY: ⚠️ 🚧 ⚠️ uncheck ⚠️ 🚧 ⚠️ (default is checked)

        - note: there is currently a known issue that prevents core-paas deployments to new environments. The EKS cluster will still be created with this unchecked. A core-paas deploy can then be initiated separately using this job: https://jenkins.build.msap.io/job/core-paas/job/deploy/view/change-requests/job/PR-2659/build?delay=0sec

          - example parameters: https://jenkins.build.msap.io/job/core-paas/job/deploy/view/change-requests/job/PR-2659/9/parameters/

          - more info on this deploy workaround:

            - slack thread: https://salesforce-internal.slack.com/archives/C03HW1X2C0G/p1684773337317309?thread_ts=1684511097.251879&cid=C03HW1X2C0G

            - Team dependency: https://gus.lightning.force.com/lightning/r/ADM_Team_Dependency__c/a0nEE000000AR3lYAG/view

            - related GUS Work item: https://gus.lightning.force.com/lightning/r/ADM_Work__c/a07EE00001Qjy2LYAR/view

      - KUBERNETES_DISTRO: (leave default "eks" setting)

      - MULESOFT_CLOUD: commercial or gov

      - MULESOFT_CLUSTER_TYPE: change to `deploy` for our team's clusters

      - DRY_RUN: uncheck to create the cluster, leave checked for a dry run

      - remaining parameters can use the default blank values at this time (no change case value is required until we build clusters in higher envs)

- New Region (existing environment): If provisioning the EKS cluster into a new region in an existing environment, simply make sure your region is already accounted for in the Jenkinsfile and run the Jenkins job with the “TARGET_REGION” parameter set to your region of choice. No new credential or changes from the “New environment” steps are required.

Optional prerequisites (for core-paas app deployments)

- tf-core-paas-heartbeat (provisions the IAM role required by the core-paas heartbeat app)

  - example

    - PR adding a new environment: https://github.com/mulesoft-ops/tf-core-paas-heartbeat/pull/5

    - Then run the associated Jenkins job against your environment/region to add the role here: https://jenkins.build.msap.io/job/DevOps/job/terraform-new/job/tf-core-paas-heartbeat/job/master/build?delay=0sec

- Also see Deploying Core PaaS Apps for initial core paas app deployment docs


Core Paas app deployment

⚠️ 🚧 ⚠️ Important Note

There is currently a known issue that prevents core-paas deployments to new environments from master branch. A core-paas deploy can be initiated using this particular Jenkins job: https://jenkins.build.msap.io/job/core-paas/job/deploy/view/change-requests/job/PR-2659/build?delay=0sec

- more info on this deploy workaround:

- slack thread: https://salesforce-internal.slack.com/archives/C03HW1X2C0G/p1684773337317309?thread_ts=1684511097.251879&cid=C03HW1X2C0G

- Team dependency: https://gus.lightning.force.com/lightning/r/ADM_Team_Dependency__c/a0nEE000000AR3lYAG/view

- related GUS Work item: https://gus.lightning.force.com/lightning/r/ADM_Work__c/a07EE00001Qjy2LYAR/view

⚠️ 🚧 ⚠️

core-paas apps installed on our kdeploy-dev EKS cluster

- https://salesforce.quip.com/dtytA509hJlM#temp:C:GSdbf3739f78f1c4e7db56e0110b (includes associated GUS work items)

- Kilonova Envs config

  - The following Kilonova Envs config settings were added to facilitate deployment of the core-paas apps

    - Top level kdeploy-dev values.yaml: https://github.com/mulesoft/kilonova-envs-config/blob/master/kdeploy-dev/values.yaml

    - Top level kdeploy-dev secrets.yaml: https://github.com/mulesoft/kilonova-envs-config/blob/master/kdeploy-dev/secrets.yaml

    - values and secrets yaml files in the kdeploy-dev/core-paas subfolder (and in each of the subfolders within kdeploy-dev/core-paas): https://github.com/mulesoft/kilonova-envs-config/tree/master/kdeploy-dev/core-paas

- App deployment

  - As each core-paas app was configured within kilonova envs config, it was subsequently deployed via the Jenkins job listed at the top of this document (https://jenkins.build.msap.io/job/core-paas/job/deploy/view/change-requests/job/PR-2659/build?delay=0sec). An example of the parameters set can be seen here (in this case the core-paas heartbeat app): https://jenkins.build.msap.io/job/core-paas/job/deploy/view/change-requests/job/PR-2659/83/parameters/


Terraform


Onboarding to Terraform Pipeline

1. Create a tf-<product>-<component> repository in the MuleSoft-Ops Organization

This repository is meant to host all of your Terraform (.tf) files and should be organized according to the latest Terraform standards.

There are plenty of example repositories to look at in MuleSoft-Ops to show you how to organize your variables, profiles, modules, dependencies, etc.

You can request a new repository from any channel in Slack using this ProdEng bot slash command:

/prodeng create-repo

2. Setup your Terraform resources

Once you have your repository, you will need to add the basics to get started with Terraform.

The basics are:

- main.tf

- variables.tf

Main

The main.tf is merely an example of a basic Terraform file. You can provide any and all resources there, or you can organize them by any other logical grouping of separate .tf files

It can often be helpful to group your Terraform code by infrastructure type, AWS service configurations, etc.

Example: If you have IAM resources, you can group them all in an iam.tf or if you have RDS databases, place the necessary configurations in rds.tf

The Terraform pipeline will not be prescriptive of how you must organize your files.

Variables

The variables.tf file will be necessary as you configure more resources. Eventually, you will need to provide data that is used throughout the repository or even supplied from another module.

If you're just getting started, the basic variables you will need can be seen in the next section because you will want to use variables to provide data for the tags module.

3. Tag your Terraform resources

This is a requirement for all of our AWS resources, and therefore is a critical part of onboarding your Terraform through the MuleSoft Terraform pipeline.

There is a module that you should take advantage of to get started, and then you may add any other necessary tags by merging them with the tags module.

Include the tags module

In your modules.tf file, add the tags module:

    module "tags" {
      source             = "git::git@github.com:mulesoft-ops/tf-tags-module.git?ref=v2.0.0"
      product_tag        = var.product_tag
      component_tag      = var.component_tag
      asset_tag          = var.asset_tag
      u_gus_team_id      = "<id>" # your team's ID
      u_customer_data    = "None"
      p_confidentiality  = "Internal"
      u_service_tier     = "<service-tier>"
      u_scan_eligibility = "Not Applicable"
    }

Add any other tags you need

If you have other tags you would like to include alongside the required tags, you can merge them in modules.tf:

    locals {
      legacy_devops_tags = {
        ENV       = var.env
        OWNER     = var.owner
        ROLE      = var.role
        REPO      = "https://github.com/mulesoft-ops/tf-muleteer"
        Terraform = "true"
      }

      common_tags = merge(module.tags.tags, local.legacy_devops_tags)
    }

Or you can add them to individual resources in your .tf files:

    resource "<type>" "<name>" {
      some_key = some_value

      # here we are merging our tags 'Name' and 'ENV' with
      # the existing tags from the tags module
      tags = merge(var.tags, tomap({ "Name" = var.name, "ENV" = var.environment }))
    }

4. Add a Jenkinsfile to your terraform repository

Finally, once your Terraform is set up, you will need a Jenkinsfile in your Terraform repository so that it is discoverable by the terraform-new job in Jenkins.

You can use this Jenkinsfile as an example

Adjust your available regions according to your needs

    switch (env.JENKINS_URL) {
      case devJenkins:
        supportedEnvs = ['kdev']
        supportedRegions = ['us-west-2']
        break
      case buildJenkins:
        supportedEnvs = ['kstg', 'kprod']
        supportedRegions = ['us-west-2']
        break
      case govJenkins:
        supportedEnvs = []
        supportedRegions = []
        automaticEnvs = []
        automaticRegions = []
        break
      default:
        error "unknown jenkins url ${env.JENKINS_URL}"
        break
    }

NOTE: you may be concerned that the Jenkinsfile does not have a plan option. Our Terraform job runs a plan and then asks for input before running the apply. See the

5. Kick off the Terraform job

After you add your Jenkinsfile, you should be able to start a build in Jenkins using the terraform-new job.

https://jenkins.build.msap.io/job/DevOps/job/terraform-new/

The job will run a terraform plan and then ask for your input to approve the plan. Once you approve, the job will run the actual Terraform apply command.


Deploy Control Plane


Onboarding to DCP

Understanding the Service

DCP is MuleSoft's dedicated deployment service, built to integrate with the build system and improve our confidence, resilience, and performance in delivering services onto the CorePaaS platform.

Components

Data Access Service

The Go service which acts as a programmatic interface and orchestrator for the deployment pipeline.

DCP UI

The React frontend of the DCP service. The UI uses Next.js as the base framework for developing and reusing React components, delivering assets, etc.

Preparing Your Service for Onboarding

- Is your service on CorePaaS already, or at least CorePaaS compatible?

- Have you configured your service with Kilonova/Valkyr (`kilonova.yaml`, `valkyr.yaml`)?

- Do you rely on continuous delivery to deploy changes?

Verify Your Team Details

- Navigate to the DCP UI

- Select the "Teams" tab

- Search for your team

- Confirm your team details at the top are correct

ℹ️ If you need to modify your Team's information, you can edit the details in the Muleteer repository.

Onboarding Your Service for Automated Deployments

- In a new tab, navigate to your service repository on GitHub.

- Create or update your kilonova.yaml file with the `deploymentMethod` key.

    deployment:
      method: dcp

You may refer to this configuration as an example.

The branch used to add this configuration to your service (and any branch thereafter that contains this configuration) may be used to trigger a deploy. This automated behavior is subject to DCP branch patterns.

🧐 Consult this article to learn more about automated deployments in DCP.

📚 Related Topics

Quick Start

If you would prefer a quick start tutorial to become familiar with DCP, see this article on how to Create a Manual Deployment

How DCP Works

If you want to dive into the "nuts and bolts" of the system and its various components further, view the How DCP Works article.

Troubleshooting

If you come across issues, the first place to stop would be our Troubleshooting guide.


DCP Slack notifications

DCP has the ability to send Slack notifications for a Deployment.

Notifications are available for the following states:

- Deployment `Started`

- Deployment `Failed`

- Deployment `Completed`

- Deployment `Approved`

- Deployment `Cancelled`

Configure notifications

Teams can choose to configure a specific Slack channel to receive notifications. By default, notifications will be sent to ms-$PRODUCT-bot if not set. See MCN for more details on Product.

- To enable a specific Slack channel, configure your Muleteer product.yaml with a `slackBotChannel` key/value pair. See idp.yaml for an example.

For more information on how Slack notifications work in DCP, refer to the Events Publisher Client.

Notification Detail

Notifications comprise the following:

- `DeploymentSet.Url`: This is the URL linking back to the DCP UI.

- `EventType.Name`: This is the state the deployment is in, e.g. `Started`, `Failed`, etc.

- `Service.Name`: Name of the service.

- `DeploymentSet.Version`: Version of the deployment.

- `CurrentPhase.Environment`: The environment of the deployment.

- `CurrentPhase.StartedAt`: The StartedAt time of the deployment.
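To make the field list concrete, here is a minimal sketch of how the six fields could be assembled into a message. Only the field names come from this page. The `DeploymentNotification` class, the `render` function, the message layout, and all sample values are hypothetical, and the real Events Publisher Client may format notifications differently.

```python
from dataclasses import dataclass


@dataclass
class DeploymentNotification:
    url: str          # DeploymentSet.Url
    event_type: str   # EventType.Name, e.g. "Started" or "Failed"
    service: str      # Service.Name
    version: str      # DeploymentSet.Version
    environment: str  # CurrentPhase.Environment
    started_at: str   # CurrentPhase.StartedAt


def render(n: DeploymentNotification) -> str:
    """Render a two-line plain-text message (layout is an assumption)."""
    headline = (
        f"Deployment {n.event_type}: {n.service} {n.version} "
        f"in {n.environment} (started {n.started_at})"
    )
    return f"{headline}\n{n.url}"


# Hypothetical sample values for illustration only.
example = DeploymentNotification(
    url="https://dcp.example.invalid/deployments/123",
    event_type="Started",
    service="example-service",
    version="1.2.3",
    environment="kdev",
    started_at="2024-07-01T19:32:00Z",
)
print(render(example))
```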

Last Updated: 2024-07-01T19:32:00+0000

Deploy UI

DCP User Guide

Document Purpose: This document describes how to use the Deploy Control Plane (DCP) for MuleSoft Service Teams.

- Glossary

- How Automated Deployments work

- Failure handling

- Cancelling a deployment

- GOV processing for deployments

- Deployment state lifecycles

- TODO: Deployment Failure Troubleshooting Tips

- DCP Events

- Supporting Change Freezes

- API Swagger locations

- Deployment Events

- DCP App

- Find your team and service

- Find deployments

- Create a manual deployment

- Cloning a Deployment

- Configuring Slack notifications

- Correcting Team or Service information via Muleteer and Github

Glossary

| Term | Description |
|---|---|
| Deployment | describes a series of phases by environment. |
| Phase | a series of releases to cluster targets for a single environment |
| Phase Prerequisite | items that must be completed before a phase can begin like a GUS change case approval or an approval for continuous-delivery prior to deploying to Production |
| Release | a Helm deployment by CPC |
| CPC | Core-Paas Client - used to deploy to our clusters |
| Deploy Strategy | describes a progression of environments that an artifact manifest should be deployed to. |

How Automated Deployments Work

DCP will automatically deploy an artifact manifest to the cluster targets in each environment. DCP deployments are triggered by a BuildCompleted event published from the build tooling. DCP uses a database to manage deployments and their state.

- DCP receives the BuildCompleted event, which includes the artifact manifest, MCN component name, channel, deployment strategy attributes and more.

- DCP creates a Deployment using details from the event payload.

  - Note: DCP maintains a table of deployment targets (clusters by environments, etc) imported from channels.yaml.

  - DCP finds the environments to include in this deployment and creates ordered Phases for each environment. DCP queries the targets table by deployment strategy and channel.

  - Each Phase finds a list of targets for this phase’s environment.

  - If this phase will go to GOV, we add GOV phases to the deployment. See 'GOV Processing for Deployments' below for more.

- Once a deployment is created, we then wait for the artifact's security scanning to complete. To do this, DCP initiates an Argo workflow that polls the artifact API every minute for scan status. When we detect the artifact is scanned, the workflow calls our DCP API to set the status to Scanned. This workflow does not time out, but will be cancelled if you cancel a deployment. See the Scanning/Scanned workflow states at Deployment States.

- Once a deployment is scanned, we initiate the deployment’s first Phase.

- Depending on the environment’s configuration, a phase may require one or more Checks. If a check fails, the Phase is immediately cancelled. See Phase Checks.

- Depending on the environment’s configuration, a phase may require a Change Case. If a Change Case is required, we will create a GUS change case in your team’s queue for you. See “Phase pre-requisites” below. See Phase Approval.

- As we work through the Deployment, we send each Phase to an Argo workflow which concurrently calls CPC on each Release’s targets. The workflow notifies DCP as it progresses through each Phase and Release. Users can monitor the deployment progress on the DCP UI.

- Phase Pre-requisites: an environment can be configured with a variety of pre-requisites that require approval by humans, GUS cases, and more. See Phase Approval.

- Once all Phases have been deployed, the Deployment is marked as Completed. If any of the Phases fail, the Deployment will be marked as Failed. If any of the Phases are cancelled, the Deployment will be marked as Cancelled. See the affected Phase's statusDetail field for more info. See Troubleshooting for more info.
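The final step above, rolling phase outcomes up into a Deployment status, can be sketched roughly as follows. This is a hedged illustration and not DCP's actual code: the `Status` enum and `roll_up` function are hypothetical names, checking Failed before Cancelled is an assumption (the text does not say which wins if both occur), and returning Started while phases are still in progress is also an assumption.

```python
from enum import Enum
from typing import Iterable


class Status(Enum):
    NEW = "New"
    STARTED = "Started"
    COMPLETED = "Completed"
    FAILED = "Failed"
    CANCELLED = "Cancelled"


def roll_up(phase_statuses: Iterable[Status]) -> Status:
    """Derive a Deployment status from the statuses of its Phases."""
    statuses = list(phase_statuses)
    if Status.FAILED in statuses:
        return Status.FAILED      # any failed Phase fails the Deployment
    if Status.CANCELLED in statuses:
        return Status.CANCELLED   # any cancelled Phase cancels the Deployment
    if statuses and all(s is Status.COMPLETED for s in statuses):
        return Status.COMPLETED   # every Phase has been deployed
    return Status.STARTED         # assumption: otherwise still in progress
```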

GOV Processing for Deployments

DCP also deploys to GOV environments if the service's isGov attribute is true. We read the isGov attribute from the kilonova.yaml file in the service's repo. In the step described above where DCP queries the deployment targets table, DCP will append GOV phases to the Deployment. GOV phases will create a separate change case from the Commercial Production change case, and service teams will need to review the change case that is auto-created. Update the GUS change type, change risk, set the deploy window, and submit the GUS case for approvals. You will be notified via standard GUS notifications. Once the change case is in “scheduled-approved” status, the Phase will evaluate the case for approvals, pause for subsequent approvals and then begin deploying. If the GUS approval is rejected, or if the timeout of 14 hours is exceeded, the Phase will be automatically cancelled.

Failure Handling

When any Release fails, the workflow engine will notify DCP of the failure, including details about the error. A Release failure will quickly bubble up to a Phase failure and then a Deployment failure. A DeploymentPhaseFailed event is raised to the event bus and a Slack notification is sent to the team (if their Slack channel is configured). The notification links users to the DCP UI, where they can view the error details and begin troubleshooting. See Troubleshooting for more info.

Cancelling a Deployment

A user may wish to cancel a deployment for a variety of reasons. Perhaps the deployment has become “stuck“ and does not seem to be progressing for a long period of time or the user discovers that the deployment was mistakenly triggered. When a deployment is cancelled, the current Phase notifies the workflow to stop its processing and DCP does not progress to the next phase.

To cancel a deployment, open the DCP app and find your deployment in the list. Alternatively you may click the deployment link inside the Slack notifications your team was sent. Once found, click the Cancel button next to the deployment. (insert picture)

Supporting Change Freezes

When a change freeze is in place, GUS cases cannot be actioned to “scheduled-approved”. As DCP relies on this state, deployments to Prod are blocked until the freeze is lifted.

Authentication and Authorization

TBD

Configuring Slack notifications

Slack channel and workspace are imported from Github.

More topics

Last Updated: 2024-07-01T19:32:00+0000

Authentication and Authorization

Overview

The DCP UI is secured with Quantum-K (keycloak). QK is a forked version of Keycloak, so this doc will refer to it as Keycloak.

The DCP UI is a React application built on the Next.js framework and running on Node.js. The UI uses the next-auth.js library to handle the SSO flow with Keycloak.

As an OpenID application, a Keycloak client must be configured for each callback URL, so Keycloak has separate clients for dev, stage and production.

User flow

- user visits DCP UI and must login to use any features.

- user clicks login in top nav, is redirected to Keycloak UI.

- user logs into Keycloak. Upon success, the user is redirected back to the DCP UI and a JSON Web Token (JWT) and an ID token are returned. A session is created with these tokens.

- the Auth JWT contains a series of claims that correspond to Entitlements. We will use DCP Admin and SRE entitlements to drive admin groups in DCP.

- When using DCP, all features are read-only unless you are a member of a team that owns a service. When you are in this team, you may create a manual deployment and approve human workflows.

- To determine if you are in the service's team, we look up the GUS team ID that is linked to your service, get the team membership and check that your email address (from the JWT) is in the membership list.

- Note that the JWT has an expiration date. The DCP-UI knows how to renew this token when it expires.

Securing Authorization calls

- the "is user in team" call must be secured from tampering by browser tools. So we include the JWT in the header of each call to the DAS (DCP API).

- The DAS will unpack this JWT, validate that it has not been tampered with, and use the user's email to check that the user is in the team.

- If not in the team, a 401 response is returned

DAS API Security

Note: DAS means: Data Access Service. The API is secured differently from the UI. The API authenticates 'clients', not users. Each client must authenticate with a client certificate.

DAS Client flow

- Client calls the DAS and includes the client certificate in the header.

- API checks that the certificate is trusted and gets the client ID from the certificate.

- API stores a list of routes and methods that are valid for each client ID. API checks that the client ID is authorized to complete each call by comparing current HTTP verb (method) and route to the list.

- If not successful, a 401 response is returned.

Example code

These values are base-64 encoded.

req.headers.set('X-Client-Cert', client_cert)
req.headers.set('UserAuthToken', token)

To replay these requests, you can get the cert and user auth token values from the browser's network trace.
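The route check in the DAS client flow above can be sketched as a lookup keyed by client ID. This is only an illustration under stated assumptions: the client IDs, routes, and the `authorize` function are hypothetical and are not taken from the DAS code, and certificate validation is assumed to have already happened.

```python
from typing import Dict, Set, Tuple

# Hypothetical allow list: client ID -> set of (HTTP method, route) pairs.
ALLOWED_ROUTES: Dict[str, Set[Tuple[str, str]]] = {
    "workflow-client": {("POST", "/phases/approve"), ("GET", "/deployments")},
    "ui-client": {("GET", "/deployments")},
}


def authorize(client_id: str, method: str, route: str) -> int:
    """Return 200 if the client may call this method and route, else 401."""
    allowed = ALLOWED_ROUTES.get(client_id, set())
    return 200 if (method.upper(), route) in allowed else 401
```

An unknown client ID has an empty allow list, so every call from it results in a 401.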

Last Updated: 2024-07-01T19:32:00+0000

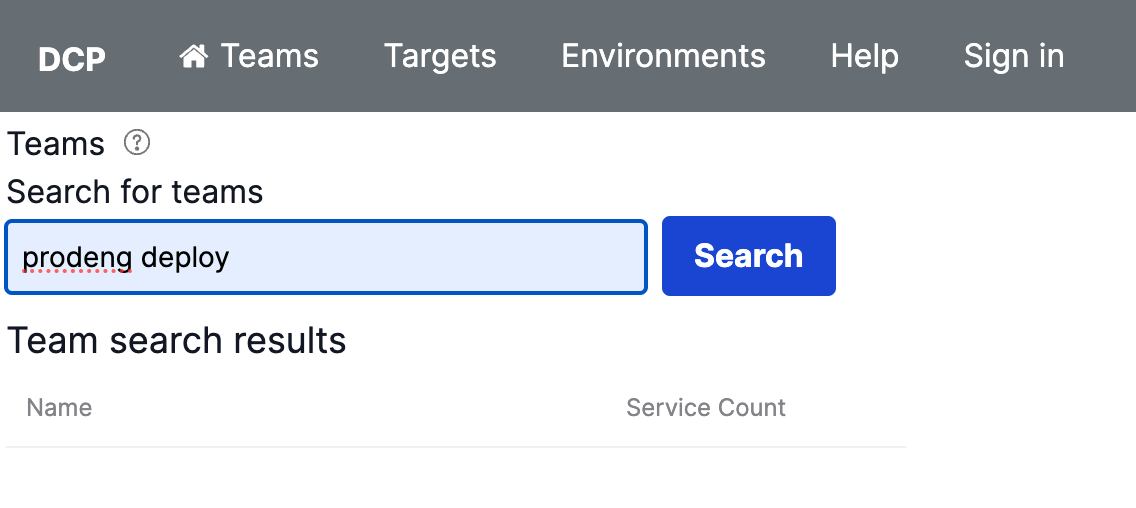

Searching for Teams

To find a team in DCP, enter your criteria in the search field and click the search button. You must enter at least two characters. The search will return results where the team name contains the search criteria.

Searching for 'mule' will return all Teams that have those letters in their team name.
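The search rule can be illustrated with a short sketch. The `search_teams` function is hypothetical, and case-insensitive matching is an assumption; the page only states the two-character minimum and the "contains" behaviour.

```python
from typing import List


def search_teams(team_names: List[str], criteria: str) -> List[str]:
    """Return the team names that contain the search criteria."""
    term = criteria.strip()
    if len(term) < 2:
        raise ValueError("enter at least two characters")
    needle = term.lower()  # assumption: matching ignores case
    return [name for name in team_names if needle in name.lower()]
```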

Last Updated: 2024-07-01T19:32:00+0000

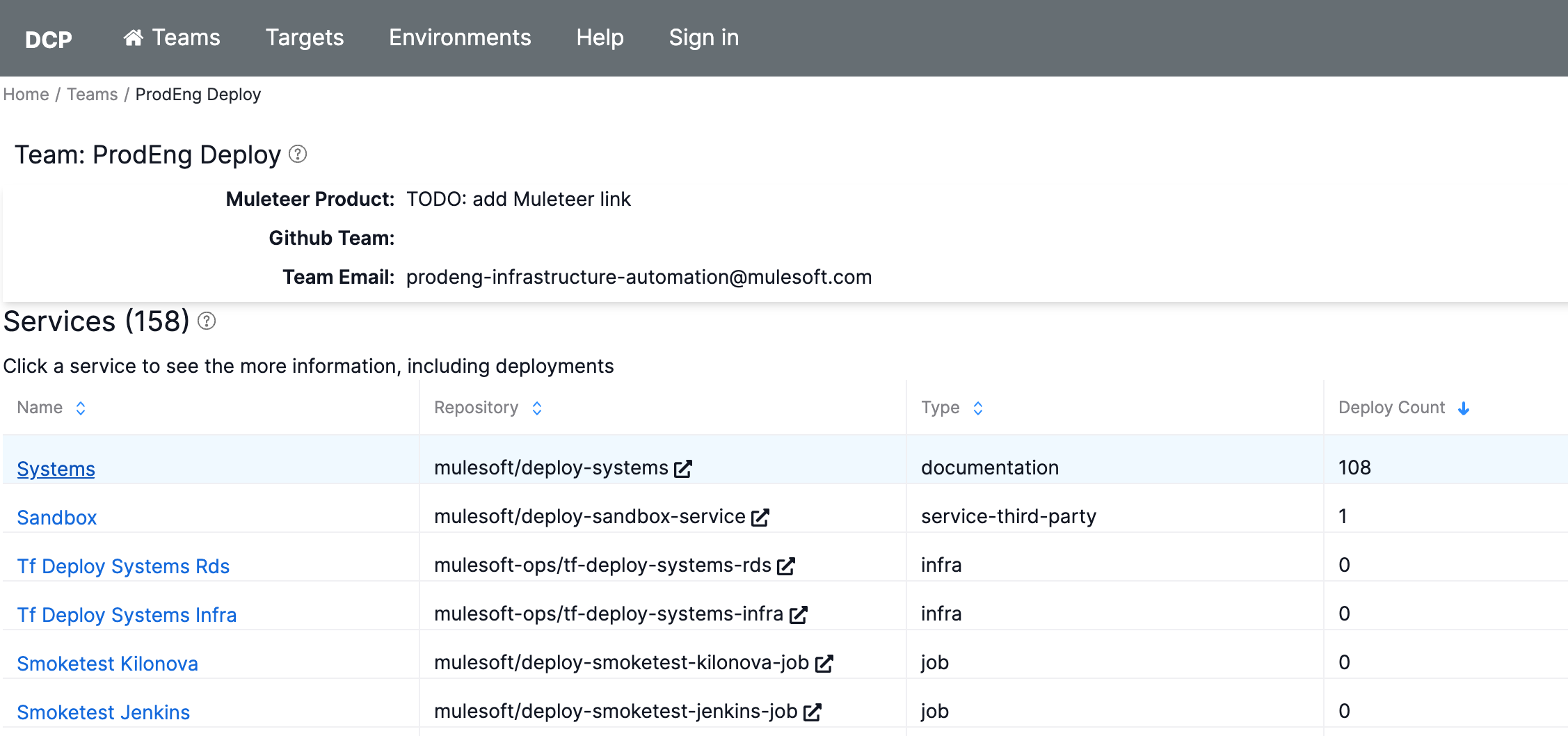

Viewing Team Details

On this screen you will find the team details and a list of services for this team.

Team Details

- Muleteer product: this is a link to the Muleteer product.

- Github Team: this is a link to the team in Github, when available.

- Team email: the email address for the team, as pulled from Muleteer or Product.yaml.

Service List

This is a list of services for a team.

- The column headings are sortable.

- Columns include:

- The first column is a link to service details.

- The Git repository, the service type

- a count of deployments, since this may indicate a more frequently used service.

Last Updated: 2024-07-01T19:32:00+0000

Service Detail Page

This page provides details about the service.

- Type:

- Github Repo: the Github repository for this service. Imported from Muleteer

- Rollback Strategy: TODO: link to existing documentation on rollback strategy

- Slack Workspace: this is usually defaulted to: salesforce-internal

- Slack Channel: the channel that deployment notifications are sent to. Imported from kilonova.yaml

- Runtime Dependencies: (for a future feature)

On this page you can also:

Last Updated: 2024-07-01T19:32:00+0000

Service Deployability

Tip: for this document, Service is synonymous with Component

You may have seen this warning on the DCP services list

What Makes a Service Deployable?

The answer is very simple. For every Unified Pipeline component, we have a defined componentType. Each service type is recognized as "deployable" or "non-deployable", which is roughly established according to this chart.

How Do I Make a Non-Deployable Service Deployable?

The componentType configuration is stored in Muleteer. Here is an example configuration of a service with a deployable type (service).

- githubRepository: mulesoft/deploy-systems
  productionStatus: nonProduction
  componentType: service

In order to change your component to a deployable componentType, go to Muleteer's repository, identify your product and component, and modify your component's configuration to be a deployable type.

➡️ The deployable types for DCP are listed here.

Last Updated: 2024-07-01T19:32:00+0000

Deployment Detail Page

About Deployment

Deployments are created automatically when a BuildCompleted event occurs in the Event Bus. In the event, we receive the Deployment Strategy and the Cloud variables. DCP then queries our Deployment Targets table to select a list of environments to create phases for and list of cluster targets to release to.

The model is this:

- Deployment

  - Phase(s) - per environment

    - Release(s) - per cluster

  - Phase(s) - per environment

This feature lists details of the deployment.

- Deployment name. This is simply a friendly name to refer to a deployment by. A guid is a mouthful.

- Service: the service name that owns these deployments.

- Status: overall status of the deployment. See Deployment Statuses.

- Started: time when the deployment started.

- Stopped: time when the deployment stopped.

- Version: the artifact version as tagged in Github. This is a link to the Github tag.

Below the deployment details, is a list of phases defined for the deployment. The phase list shows:

- The phase name and the current status, for at-a-glance use.

- Approve and Reject buttons, if human approval is required.

- Cancel button - to cancel a specific phase. Cancelling a phase will also cancel the parent deployment.

Clicking on a phase, you then see the Phase Details and the Release List for the selected phase.

Phase details are:

- Phase ID: the internal identifier for the phase. Helpful to have this for debugging with logs and MonC. A helpful button lets you copy the ID to your clipboard.

- Status: the phase status. See Phase Statuses

- Started: time when the phase started.

- Stopped: time when the phase stopped.

- GUS Case ID: this links to the GUS case created, if a GUS case is required for approval.

- Created: when the phase was created.

- Updated: when the phase was updated.

- Status Detail: in some scenarios, such as a cancellation or a failure, DCP will store additional details in the Status Detail. This field is hidden if empty.

- Environment Rules and Approvals: this lists the pre-requisites for phases into the given environment and any approvals for those pre-requisites.

- learn more at About Phases

The release list feature lists the release for a phase:

- Name: this is the name of the cluster.

- Status: this is the deployment status of the release. See Release Statuses

- Started: time when the release started.

- Completed: time when the release completed.

- Created: when the release was created.

- Updated: when the release was updated.

Last Updated: 2024-07-01T19:32:00+0000

Creating A Manual Deployment

Why would I want to do this?

There are several use cases for manual deployments, including:

- Creating a deploy that targets a specific environment

- Testing out DCP when onboarding a new team

- Redeploying a specific version to mitigate an issue

What Are Manual Deployments?

Manual deployments function similarly to automatic deployments, but are triggered by humans as a one-off.

Manual Deployment Dependencies

Artifact Version: A manual deployment requires an existing artifact that has been vulnerability scanned in the last 30 days. The dropdown version list only shows artifacts that meet these criteria. If needed, create a PR against this repo to trigger a new build event and wait for vulnerability scanning to occur in Harbor.

Deployment Strategy: The deployment strategy is used in two ways. First, the strategy tells us the last environment to deploy to when the branch is main/master. If your deploy strategy is "development", we will not deploy past the dev environments, even if your branch strategy or manual deployment requests that we target upper environments. Second, as your service becomes ready for Production, you will move your Deploy Strategy to "continuous delivery" or "continuous deployment". When the strategy is set to "continuous delivery", we will pause your deployment at the Production environment and require human approval to continue.

DCP reads your deployment strategy from product.yaml. If there is an error reading this file, we will display the error and prevent deployments until the value is set. Please update your product.yaml and wait up to one hour for the deployment strategy to be updated in the DCP database.
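The 30-day scan requirement for artifact versions described above amounts to a simple date filter. The following is a minimal sketch, assuming each version carries a scan timestamp; the `eligible_versions` function and its input shape are hypothetical and do not reflect the Harbor or DCP APIs.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

SCAN_MAX_AGE = timedelta(days=30)  # from the text: scanned in the last 30 days


def eligible_versions(
    scans: Dict[str, Optional[datetime]], now: datetime
) -> List[str]:
    """Return versions whose vulnerability scan is no older than 30 days.

    `scans` maps a version to its scan time, or None if never scanned.
    """
    cutoff = now - SCAN_MAX_AGE
    return [
        version
        for version, scanned_at in scans.items()
        if scanned_at is not None and scanned_at >= cutoff
    ]
```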

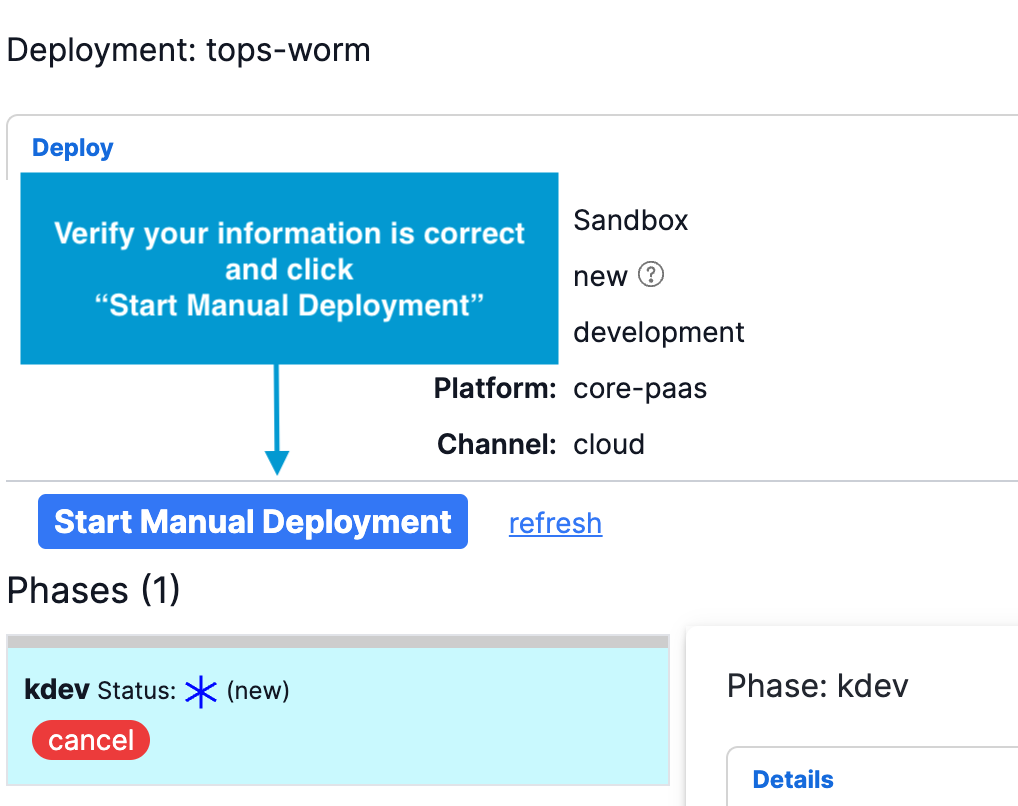

Tutorial

If you desire to "get your hands dirty" with DCP, we've got you covered. This guide will show you how to trigger a manual deployment of your service.

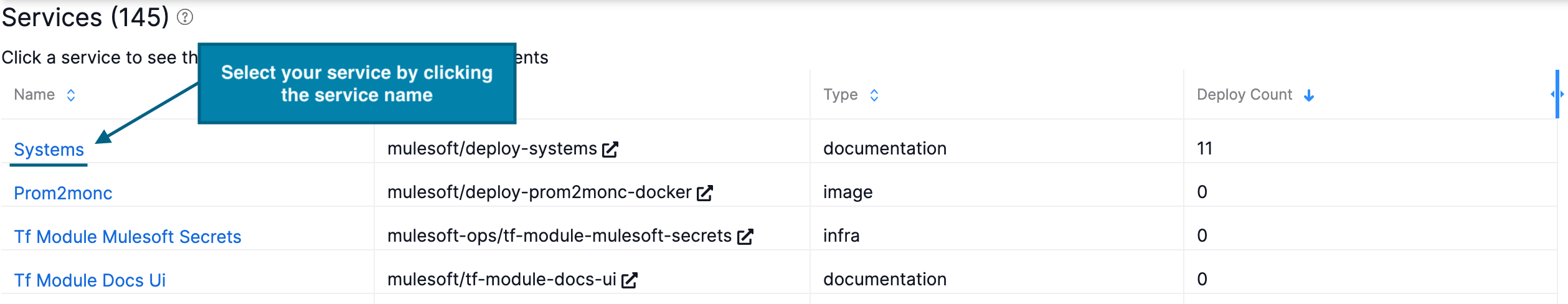

-

Navigate to your Team's page, just as you did when you onboarded to DCP to verify your team's information.

-

Scroll down to find the Service you would like to deploy. You can sort the rows by selecting the column name in the table header.

-

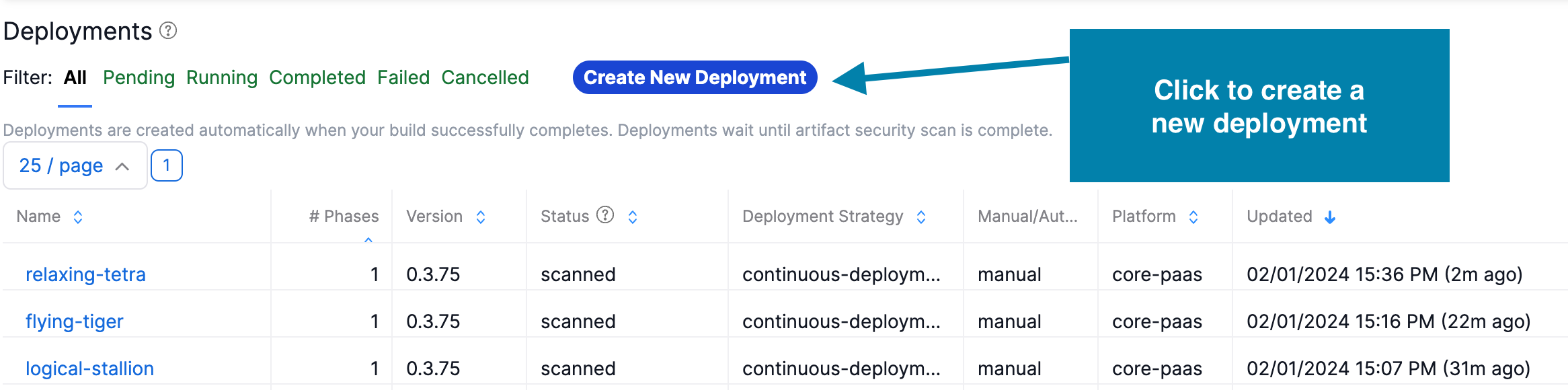

Scroll to the bottom of your service page and under Deployments click to Create New Deployment

-

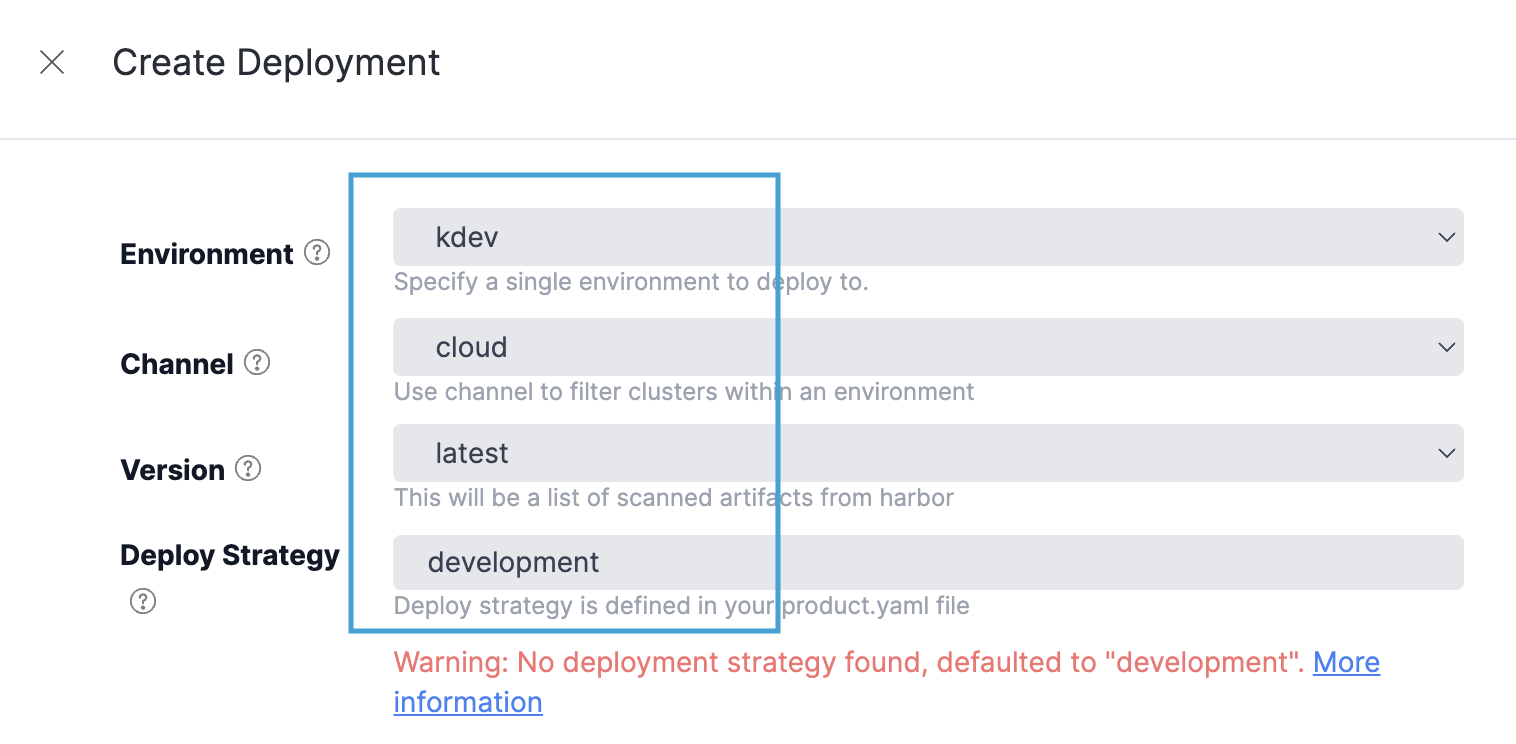

Fill out the form according to the following values:

- Environment: Select your target CorePaaS environment

- Channel: Select the channel that your services reside in.

- Version: latest will be the most recent version from Harbor. :star: Tip: If your list is out-of-date, close and re-open the panel to refresh the data.

- Deploy Strategy: Only necessary if you desire to override your current strategy as defined in the kilonova.yaml for your service.

- Once a manual deployment is created, it will stay in a "new" state until it is started. Click the 'start deployment' button to start a manual deployment. This step does not apply to automatic deployments triggered from events; automatic deployments are started immediately.

Related Topics

Last Updated: 2024-07-01T19:32:00+0000

Branching Strategy for Deployments

Overview

When an automatic deployment occurs, DCP receives a build event and must deploy the given artifact to the proper environment. Branch strategies help DCP know which environments to target based solely on the Git branch naming convention.

Use Cases

- As a user, I want to define the environments that my branch is deployed to so that my dev, stage, prod, etc branches are deployed where they are expected.

- As a user, I want my services to have an initial standard branch pattern-to-env configuration, so my services can be deployed “out of the box”

Teams are encouraged to define branch prefixes aligning with their development and release workflows. For instance, a team working on a refactor may use a branch prefix like "dev/container-refactor" if they would like their change pushed out to test environments. This flexibility in branch naming conventions accommodates project-specific needs.

Default Branch Strategies

All services will receive a set of default branch strategies that should meet the needs of most services. When custom branch strategies are required, contact the DCP Support team.

When the built event arrives, DCP will compare the branch name against each branch strategy in the order they are displayed on the Service Detail page.

View Branch Strategies

On a given service, you will see the list of branch strategies

Manage Branch Strategies

In a future release, DCP will allow service owners to insert, update and delete their own branch strategies. Please note that images are created in Harbor based on branch patterns, so it is possible to create a branch pattern that builds but does not create images, and therefore cannot be deployed by DCP.

Troubleshooting

- No branch patterns: When no branch strategies exist, the deployment is immediately cancelled.

- No matching branch strategies - when no strategies are matched to the branch name, the deployment is immediately cancelled.

- A phase for an environment does not have any releases - when no clusters are found for a given phase/environment, we will cancel the entire deployment so that you can correct the channel or other deployment parameters

In DCP you can

- Custom Branch Prefixes: Teams define prefixes in line with their development strategies.

- Deployment Destination Selection: Flexibility in specifying target environments.

- Detailed Deployment Insights: Access to deployment status, environment details, and timestamps.

- Deployment Triggers: Teams initiate deployments directly from the portal.

- Documentation Integration: Links to onboarding resources for branching strategies and best practices.

Transitioning to Branch Strategies

Teams currently using Deployment Strategies will transition to the new strategy. Existing DeploymentStrategies and corresponding branch patterns and target environments are as follows:

- Development Strategy:

- Branch Pattern: "main"

- Target Environments: [Dev, QA]

- Continuous Delivery Strategy:

- Branch Pattern: "main" or "master"

- Target Environments: [Dev, QA, Staging] (will stop at staging and require manual deploy to prod).

- Continuous Deployment Strategy:

- Branch Pattern: "main" or "master"

- Target Environments: [Dev, QA, Staging, Prod, Prod-EU]

Example Branch Prefixes and Target Environments

Example branch prefixes for channel cloud and their resulting target environments:

| Branch Prefix | Target Environments |
|---|---|
| dev/ | Dev |
| qa/ | Dev, QA |
| stg/ | Dev, QA, Staging |
| hotfix/ | Staging (for evidence), Prod, Prod-EU |
| main, master | Dev, QA, Staging (human approve if Continuous Delivery), Prod, Prod-EU, (gov phases) |
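The lookup from branch name to target environments can be sketched as an ordered scan over the strategies in the table. This is an illustration, not DCP's matcher: the `target_environments` function is hypothetical, and treating patterns ending in "/" as prefixes and other patterns as exact names is an assumption about how patterns are compared. Approval and gov phases are left out.

```python
from typing import List, Optional, Tuple

# Ordered as in the table above; DCP compares strategies in display order.
BRANCH_STRATEGIES: List[Tuple[str, List[str]]] = [
    ("dev/", ["Dev"]),
    ("qa/", ["Dev", "QA"]),
    ("stg/", ["Dev", "QA", "Staging"]),
    ("hotfix/", ["Staging", "Prod", "Prod-EU"]),
    ("main", ["Dev", "QA", "Staging", "Prod", "Prod-EU"]),
    ("master", ["Dev", "QA", "Staging", "Prod", "Prod-EU"]),
]


def target_environments(branch: str) -> Optional[List[str]]:
    """Return the environments of the first matching strategy, or None.

    None corresponds to "no matching branch strategies", in which case
    the deployment is immediately cancelled.
    """
    for pattern, environments in BRANCH_STRATEGIES:
        is_prefix = pattern.endswith("/")  # assumption: "/" marks a prefix
        if (is_prefix and branch.startswith(pattern)) or branch == pattern:
            return environments
    return None
```

With this sketch, the branch "dev/container-refactor" mentioned earlier resolves to the Dev environment only, and a branch such as "feature/x" matches nothing.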

## Questions

1. What use is Deployment Strategy after we complete this feature?

- we can use this for phase querying when branch is main/master

4. Can a user delete all their branch patterns?

- No, we will provide a warning. When no branch patterns match, this will cause automatic deployments to be immediately cancelled.

5. How does branch pattern design affect manual deployments?

- Does not apply. Manual deployments specify artifact and user can pick Environments.

---

<div>

<strong>Version: 0.3.110</strong><br>

Last Updated: 2024-07-01T19:32:00+0000

</div>

DCP Branch Patterns

Last Updated: 2024-07-01T19:32:00+0000

Cancelling a Deployment

DCP supports cancelling a deployment as long as the deployment is not already at a Completed or Failed terminal status.

Why would I want to do this?

- If the deployment was requested in error.

- If the deployment appears to be stuck, and you wish to cancel it to troubleshoot.

How to cancel a deployment

Click on the Cancel button on the Deployment List or in Deployment Detail. The deployment will:

- stop any existing phases in a 'Started' status

- mark the remaining child phases as "cancelled" if they are in a "new" status.

- move the Deployment to a Cancelled status and send a notification to the service's Slack channel.
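The cancellation steps above can be sketched as follows. This is a simplified illustration under assumptions: the dictionary shape and the `cancel_deployment` function are hypothetical, stopped 'Started' phases are recorded here as Cancelled, phases in other statuses are left untouched, and the Slack notification is omitted.

```python
from typing import Any, Dict

# Cancelling is not supported once the deployment is Completed or Failed;
# an already Cancelled deployment is treated the same way here.
NOT_CANCELLABLE = {"Completed", "Failed", "Cancelled"}


def cancel_deployment(deployment: Dict[str, Any]) -> bool:
    """Cancel a deployment in place. Returns False if it cannot be cancelled."""
    if deployment["status"] in NOT_CANCELLABLE:
        return False
    for phase in deployment["phases"]:
        if phase["status"] in {"Started", "New"}:
            # Started phases are stopped, New phases never begin.
            phase["status"] = "Cancelled"
    deployment["status"] = "Cancelled"
    return True
```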

Last Updated: 2024-07-01T19:32:00+0000

Deployment List

This feature lists each of the deployments for a given service. On this list you can:

- cancel a deployment. See Cancelling Deployments

- click to view the deployment details page

- see a phase summary. As a deployment contains multiple phases, this summarizes the phases, grouping by phase state.

- see the Deployment Status

- see the Platform the Deployment was requested for.

- see when the Deployment was created and updated.

Last Updated: 2024-07-01T19:32:00+0000

Deployment Statuses

New

This status means your deployment is created, but no phases have begun deploying.

Scanning

Your artifacts are automatically scanned when your build completes. This status means DCP is waiting for your artifacts to complete scanning.

Scanned

This status means your artifact has completed scanning.

Started

This status means one or more phases have begun deploying.

Completed

This is a terminal status that means your deployment was successful.

Failed

This is a terminal status that means your deployment failed.

Cancelled

This is a terminal status that means your deployment was cancelled by a human or an approval timed out.

Last Updated: 2024-07-01T19:32:00+0000

About Phases

What is a Phase? A deployment will contain multiple phases (some call these stages). A phase describes the releases to an environment.

Our model for DCP is structured this way:

- Deployment

  - Phase(s) - one or more per environment

    - Release(s) - per cluster

  - Phase(s) - one or more per environment

A phase has:

- Prerequisite rules that are enforced before deployments

- May have Checks that an artifact version has been deployed to a prior environment. See Phase Checks

- May have Approvals for each pre-requisite (who approved/rejected, timestamps, etc). See Phase Approvals

- The GUS case used for the approval - for this environment

- The status which includes the status of its child releases. For example, if any release is failed, the phase will be marked as failed.

You can see the phases for a deployment on the Deployment Detail page.

Related topics

Last Updated: 2024-07-01T19:32:00+0000

Checks Before a Phase Begins

About Checks

An environment may require that a phase satisfies one or more rules before it starts. Each environment (kdev, kstg, kprod, etc) can be configured in DCP to have one or more checks.

- An artifact version must have already successfully deployed to a prior environment. E.g. deployments to KProd must have first been deployed to KStg.

- future ideas: checking whether a change moratorium is in place.

These checks are evaluated before any phase approvals are processed.

When a check fails, the deployment is immediately cancelled. You can refer to the deployment phase's Status Detail for detailed information on cancellation reason.
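The "prior environment" check can be sketched as a lookup against previously completed deployments. This is a hedged example: the `prior_environment_check` function, the `REQUIRED_PRIOR_ENV` mapping, and the shape of the history are hypothetical; only the kprod-requires-kstg rule comes from the text above.

```python
from typing import Dict, Set, Tuple

# Only the kprod -> kstg rule is taken from this page; other environments
# would be configured per environment in DCP.
REQUIRED_PRIOR_ENV: Dict[str, str] = {"kprod": "kstg"}


def prior_environment_check(
    version: str, target_env: str, completed: Set[Tuple[str, str]]
) -> Tuple[bool, str]:
    """Check that `version` already deployed successfully to the prior env.

    `completed` holds (version, environment) pairs of successful deployments.
    Returns (passed, status_detail); status_detail explains a failure.
    """
    prior = REQUIRED_PRIOR_ENV.get(target_env)
    if prior is None or (version, prior) in completed:
        return True, ""
    return False, f"version {version} has not been successfully deployed to {prior}"
```

A failed check returns a reason string, which mirrors how the cancellation reason is surfaced in the phase's Status Detail.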

Related topics

Last Updated: 2024-07-01T19:32:00+0000

Approving a phase

About Approvals

An environment may require that phases obtain various approvals before they execute. These are:

- A pre-approved GUS case

- A manually-approved GUS case

- A human approval

Detailed Rule Descriptions

- A pre-approved GUS case. DCP creates a GUS case and adds it to your team's case list. The case is automatically set to a 'scheduled-approved' state. While you may see the phase transition through the Approving state, there is no action required by the user.

  - Used when: phase is being sent to a Commercial Production environment

- A manually-approved GUS case. DCP creates a GUS case and adds it to your team's case list. The phase remains in the Approving state until a user moves the case to 'scheduled-approved' state or the approval period times out. We check the GUS case for approval every 60 minutes.

  - If the case is Approved, the phase moves to start deployment or the next approval.

  - If the approval exceeds its timeout of 14 days, the entire deployment is cancelled.

  - If the approval is rejected, the entire deployment is cancelled.

  - Used when: phase is being sent to a Government Production environment. We will create the change case in new status and attach the lower environment test results. You will need to update the change type, change risk, set the deploy window, and submit for approvals. You will be notified via standard GUS notifications. Once the change case is in “scheduled-approved” status, it is marked as Approved. The Phase continues to evaluate any further approvals and begins deploying when all approvals are met.

- A human approval. If your Deploy Strategy is 'continuous-delivery' and the environment is configured to wait on this approval, the user will be prompted to Approve or Reject the phase before it will progress. Approvals and Rejections are logged in DCP for audit purposes.

  - If the phase is Approved, the phase will progress to begin deploying, or the next approval, based on configuration.

  - If the phase is Rejected, the phase will be cancelled and the deployment will end.
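The decision made on each poll of a manually-approved GUS case can be sketched as below. This is an illustration under assumptions: the `evaluate_gus_approval` function and the returned action strings are hypothetical, "rejected" is an assumed case status name, and the 60-minute interval and 14-day timeout are the values stated in this list.

```python
from datetime import timedelta

POLL_INTERVAL = timedelta(minutes=60)  # how often the GUS case is checked
APPROVAL_TIMEOUT = timedelta(days=14)  # timeout stated in the list above


def evaluate_gus_approval(case_status: str, waited: timedelta) -> str:
    """Decide what to do with a phase that is waiting on a GUS case."""
    if case_status == "scheduled-approved":
        return "approved"            # move on to deploy or the next approval
    if case_status == "rejected":    # assumed status name for a rejection
        return "cancel-deployment"
    if waited > APPROVAL_TIMEOUT:
        return "cancel-deployment"   # approval period timed out
    return "keep-waiting"            # check again after POLL_INTERVAL
```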

Related topics

Last Updated: 2024-07-01T19:32:00+0000

Phase Statuses

Each status will soon raise a corresponding Event Bus event. Currently only PhaseStarted, PhaseCompleted, and PhaseFailed are supported.

New

This is a status that means your phase is created.

Approving

This is a status that means your phase requires approval. Depending on the type of approval, DCP may be waiting on a GUS case approval or a human approval on the phase. See About Phase Approval

Approved

This is a status that means your phase was approved. If there are further approvals, the phase will return to the Approving status until each phase approval requirement is met.

Starting

This is a status that means the phase is queued to deploy. We have sent the deployment payload to our Argo Workflow and it may be queued there. Once Argo begins processing the deployment phase, it will move to a Started state.

Started

This is a status that means your phase has begun deploying. You will begin to see your Releases move through their own deploy statuses. When all phases are complete, the deployment is complete.

Completed

This is a terminal status that means your phase was successful.

Failed

This is a terminal status that means your phase failed. We try to write some information to the Status Details field.

Cancelled

This is a terminal status that means your deployment was cancelled by a human or an approval timed out. We try to write some information to the Status Details field.

More Topics

Last Updated: 2024-07-01T19:32:00+0000

Phase Events

The following events are published to the ProdEng Event Bus (PEEB), so that the enterprise can subscribe and react to these events for customized business logic, such as sending slack notifications, emails or Pager Duty pages.

- DeploymentPhaseStarted - when a Phase has begun deploying to an environment. DCP notifies the team slack channel, if configured.

- DeploymentPhaseCompleted - raised when a phase has completed. DCP notifies the team slack channel, if configured.

- DeploymentPhaseFailed - raised when a Phase has failed. DCP notifies the team slack channel, if configured.

- DeploymentPhaseApproved - raised when a requested approval has been approved.

- DeploymentPhaseCancelled - raised when a requested approval has been cancelled.

Future events

- DeploymentPhaseApproving - called for each environmental approval required.

- DeploymentCreated, DeploymentCompleted, DeploymentFailed, DeploymentCancelled

- ReleaseStarted, ReleaseCompleted, ReleaseFailed

Last Updated: 2024-07-01T19:32:00+0000

Release Statuses

New

This is a status that means your release is created in our database.

Started

This status means that the release has begun deploying.

Completed

This is a terminal status that means the release was successful.

Failed

This is a terminal status that means the release failed.

Cancelled

This is a terminal status that means the release was cancelled.

Last Updated: 2024-07-01T19:32:00+0000

Phase Status Technical Workflows

Overview

The DCP Data Access Service (DAS) uses a synchronous state management system. This design choice was for the short-term and offers simplicity and speed-to-market. However, it also is much too tightly coupled and does not scale as well as an async state system. We describe this as synchronous because the one state handler is responsible for actioning the state to the next system. For example, the /approve callback is responsible for the logic needed to action to Starting. This unnecessarily blurs logic between states.

In the near future, after GA, when scale becomes an issue, we will make the state handling more asynchronous with queues and listeners that listen for states. For example, the Starting listener will listen for items in an Approved State, and perform the logic needed to action to Starting. This allows us to accommodate a state that takes longer by adding more listeners and scaling horizontally.

Details

Note: when a phase begins by starting or approving, the parent Deployment is set to its "Started" state.

- deployment phase created, state set to NEW

- in the future, we will raise a 'Created' event.

- initialize (set approving or starting)

- evaluate any checks for the Phase's environment

- if pre-requisites are unmet for the Phase's environment, state is set to Approving. If all pre-requisites are approved or none exist, state is set to Starting.

- If the phase is kgstg or kprod, the phase will be scheduled, even if scheduledAt is current time (gusCaseAuto)

- Approving

- submits approval workflow (human, GUS case, etc)

- waits for approval or rejection.

- Argo workflow creates case with Gus CLI and then calls back to DCP to set the case Id, risk, and scheduledAt

- Approved

- workflow calls back to /approve route when the approval is approved or rejected

- if approved

- Approved event emitted

- immediately begin "starting step" or sets to startScheduled

- begin "starting step" logic if state is not startScheduled

- if approval is rejected,

- Cancel event emitted

- see Cancel logic below.

- Starting

- if a gov env, check that the scheduledAt UTC time is after current UTC time, cancels phase if not.

- if state = starting, submits deploy workflow

- Starting or StartScheduled event emitted

- StartScheduled

- when a gov phase, we may need to start the phase after the ScheduledAt time, so we can support release windows.

- periodically, we will query for phases in this state and start them when ready.

- Started

- workflow calls back to say work is beginning

- Started event emitted

- Completed event emitted

- Completed

- workflow calls back to say work is successful

- Completed event emitted

- complete deploy set if all phases done

- start next deployment phase

- Failed

- event emitted (handled)

- fail deploy set if not already failed

- Cancelled

- cancel deploy set if not already cancelled

- event emitted (? handled)
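The initialize and /approve steps above can be sketched as a small synchronous state handler. This is an illustrative sketch, not the DAS code: the `Phase` fields and all function names are hypothetical. It also rests on two assumptions: that `kgstg` and `kprod` are the "gov" environments, and that a gov phase with a future scheduledAt moves to StartScheduled rather than Starting.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Assumption: these are the environments the workflow treats as "gov".
GOV_ENVS = {"kgstg", "kprod"}


@dataclass
class Phase:
    """Hypothetical phase record; field names are illustrative only."""
    environment: str
    unmet_prerequisites: int = 0
    scheduled_at: Optional[datetime] = None
    state: str = "NEW"
    events: List[str] = field(default_factory=list)


def utc_now() -> datetime:
    """Current time in UTC, for callers that do not inject their own clock."""
    return datetime.now(timezone.utc)


def cancel(phase: Phase) -> None:
    """Emit the Cancel event and move the phase to its terminal Cancelled state."""
    phase.events.append("Cancel")
    phase.state = "Cancelled"


def begin_starting(phase: Phase, now: datetime) -> None:
    """The "starting step": schedule gov phases, start everything else."""
    if phase.environment in GOV_ENVS:
        # Gov phases must be scheduled after the current UTC time.
        if phase.scheduled_at is None or phase.scheduled_at <= now:
            cancel(phase)
            return
        phase.state = "StartScheduled"  # assumption: picked up later by a periodic query
    else:
        phase.state = "Starting"  # the real service would submit the deploy workflow here
    phase.events.append(phase.state)


def initialize(phase: Phase, now: datetime) -> None:
    """Move a NEW phase to Approving, or straight into the starting step."""
    if phase.unmet_prerequisites > 0:
        phase.state = "Approving"
    else:
        begin_starting(phase, now)


def handle_approve_callback(phase: Phase, approved: bool, now: datetime) -> None:
    """Logic behind the /approve callback: it also actions the phase to Starting."""
    if not approved:
        cancel(phase)
        return
    phase.state = "Approved"
    phase.events.append("Approved")
    begin_starting(phase, now)
```

`handle_approve_callback` calling `begin_starting` directly is the coupling the Overview describes: the handler for one state also owns the logic for the next.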


Last Updated: 2024-07-01T19:32:00+0000

DCP Observability

Logging

Logs are currently captured from the pods in the EKS cluster by the core-paas-sumologic solution, which is managed by the Core PaaS Team, and are shipped to a MonC instance of Splunk.

Structure Splunk queries using the following terms:

- index=mulesoft

- kubernetes.namespace_name=deploy (or whichever namespace you are debugging)

- kenv=kdeploy.dev (or whichever environment you are debugging)

For further information on search syntax in Splunk visit this doc.
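As a small convenience, the query terms above can be assembled programmatically. The helper below is a hypothetical sketch; `build_splunk_query` is not part of any DCP tooling, and the defaults simply mirror the example values given above.

```python
def build_splunk_query(namespace="deploy", kenv="kdeploy.dev", index="mulesoft"):
    """Join the index, namespace, and environment terms into one search string."""
    terms = {
        "index": index,
        "kubernetes.namespace_name": namespace,
        "kenv": kenv,
    }
    # Terms are space-separated, which Splunk treats as an implicit AND.
    return " ".join(f"{key}={value}" for key, value in terms.items())
```

Calling `build_splunk_query()` with no arguments yields the query for the deploy namespace in kdeploy.dev. Pass a different `namespace` or `kenv` to target another namespace or environment.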

Links:

Metrics

RDS Metrics

The refinery controller gathers Postgres metrics and forwards them to CloudWatch. For refinery to work, a monc_ro_user service account needs to be created on each managed RDS instance. To create the monc_ro_user, do the following:

- Exec into a container in a cluster that has access to your RDS instance.

kubectl exec -it $POD -- /bin/bash

- Connect to the RDS instance. This example uses dcp_database; update the FQDN as needed.

psql -h dcp-database.cfunxlmfcmct.us-west-2.rds.amazonaws.com -p 5432 -U dcp_user -d dcp_database

- Create the monc_ro_user. See the password in kilonova-envs-config.

create user monc_ro_user login password '$RO_PASS' valid until 'infinity';
grant select on pg_stat_database to monc_ro_user;

- Validate metrics and the observability service. Redeploy the core-paas-observability-service via the core-paas-deploy Jenkins job.

- Core PaaS Observability: https://github.com/mulesoft/core-paas-observability-service

- Refinery Documentation: https://salesforce.quip.com/9seNA5ce5a7Y

Last Updated: 2024-07-01T19:32:00+0000